Simulated Sense of Hearing

aurellem ☉

1 Hearing

At the end of this post I will have simulated ears that work the same way as the simulated eyes in the last post. I will be able to place any number of ear-nodes in a Blender file, and they will bind to the closest physical object and follow it as it moves around. Each ear will provide access to the sound data it picks up between every frame.

Hearing is one of the more difficult senses to simulate, because there is less support for obtaining the actual sound data that is processed by jMonkeyEngine3. There is no "split-screen" support for rendering sound from different points of view, and there is no way to directly access the rendered sound data.

1.1 Brief Description of jMonkeyEngine's Sound System

jMonkeyEngine's sound system works as follows:

- jMonkeyEngine uses the AppSettings for the particular application to determine what sort of AudioRenderer should be used.

- Although some support is provided for multiple AudioRendering backends, jMonkeyEngine at the time of this writing will either pick no AudioRenderer at all, or the LwjglAudioRenderer.

- jMonkeyEngine tries to figure out what sort of system you're running and extracts the appropriate native libraries.

- The LwjglAudioRenderer uses the LWJGL (LightWeight Java Game Library) bindings to interface with a C library called OpenAL.

- OpenAL renders the 3D sound and feeds the rendered sound directly to any of various sound output devices with which it knows how to communicate.

A consequence of this is that there's no way to access the actual sound data produced by OpenAL. Even worse, OpenAL only supports one listener (it renders sound data from only one perspective), which normally isn't a problem for games, but becomes a problem when trying to make multiple AI creatures that can each hear the world from a different perspective.

To make many AI creatures in jMonkeyEngine that can each hear the world from their own perspective, or to make a single creature with many ears, it is necessary to go all the way back to OpenAL and implement support for simulated hearing there.

2 Extending OpenAL

2.1 OpenAL Devices

OpenAL goes to great lengths to support many different systems, all with different sound capabilities and interfaces. It accomplishes this difficult task by providing code for many different sound backends in pseudo-objects called Devices. There's a device for the Linux Open Sound System and the Advanced Linux Sound Architecture, there's one for Direct Sound on Windows, there's even one for Solaris. OpenAL solves the problem of platform independence by providing all these Devices.

Wrapper libraries such as LWJGL are free to examine the system on which they are running and then select an appropriate device for that system.

There are also a few "special" devices that don't interface with any particular system. These include the Null Device, which doesn't do anything, and the Wave Device, which writes whatever sound it receives to a file, if everything has been set up correctly when configuring OpenAL.

Actual mixing of the sound data happens in the Devices, and they are the only point in the sound rendering process where this data is available.

Therefore, in order to support multiple listeners, and get the sound data in a form that the AIs can use, it is necessary to create a new Device which supports this feature.
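The idea of a Device as a pseudo-object can be sketched in plain C as a struct of function pointers. Everything in this sketch (toy_backend, null_mix, ramp_mix, toy_find_backend) is hypothetical and much simpler than OpenAL's real backend table; it only illustrates why the mixed data is visible inside the backend and nowhere else.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Hypothetical, simplified stand-in for an OpenAL backend: a name plus a
 * function pointer.  This is not OpenAL's real BackendFuncs struct. */
typedef struct toy_backend {
    const char *name;
    /* Fill `out` with `n` mixed samples; return how many were written. */
    size_t (*mix)(short *out, size_t n);
} toy_backend;

/* A "null" backend: accepts the request and produces silence. */
size_t null_mix(short *out, size_t n) {
    memset(out, 0, n * sizeof *out);
    return n;
}

/* A backend that leaves the data where the caller can read it
 * (a ramp stands in for real mixed audio). */
size_t ramp_mix(short *out, size_t n) {
    for (size_t i = 0; i < n; i++) out[i] = (short)i;
    return n;
}

const toy_backend toy_backends[] = {
    { "Null", null_mix },
    { "Ramp", ramp_mix },
};

/* Select a backend by name, the way a wrapper library picks a device. */
const toy_backend *toy_find_backend(const char *name) {
    assert(name != NULL);
    for (size_t i = 0; i < sizeof toy_backends / sizeof toy_backends[0]; i++)
        if (strcmp(toy_backends[i].name, name) == 0) return &toy_backends[i];
    return NULL;
}
```

A caller looks a backend up by name and calls its mix function; only the backend decides whether the samples go to hardware, to a file, or back to the caller.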

2.2 The Send Device

Adding a device to OpenAL is rather tricky: there are five separate files in the OpenAL source tree that must be modified to do so. I've documented this process here for anyone who is interested.

Again, my objectives are:

- Support Multiple Listeners from jMonkeyEngine3

- Get access to the rendered sound data for further processing from clojure.

I named it the "Multiple Audio Send" Device, or Send Device for short, since it sends audio data back to the calling application like an Aux-Send cable on a mixing board.

Onward to the actual Device!

2.3 send.c

2.4 Header

#include "config.h"
#include <stdlib.h>
#include <stdio.h>   /* printf */
#include <string.h>  /* memcpy, strcmp, strdup */
#include "alMain.h"
#include "AL/al.h"
#include "AL/alc.h"
#include "alSource.h"
#include <jni.h>

//////////////////// Summary

struct send_data;
struct context_data;

static void addContext(ALCdevice *, ALCcontext *);
static void syncContexts(ALCcontext *master, ALCcontext *slave);
static void syncSources(ALsource *master, ALsource *slave,
                        ALCcontext *masterCtx, ALCcontext *slaveCtx);

static void syncSourcei(ALuint master, ALuint slave,
                        ALCcontext *masterCtx, ALCcontext *ctx2, ALenum param);
static void syncSourcef(ALuint master, ALuint slave,
                        ALCcontext *masterCtx, ALCcontext *ctx2, ALenum param);
static void syncSource3f(ALuint master, ALuint slave,
                         ALCcontext *masterCtx, ALCcontext *ctx2, ALenum param);

static void swapInContext(ALCdevice *, struct context_data *);
static void saveContext(ALCdevice *, struct context_data *);
static void limitContext(ALCdevice *, ALCcontext *);
static void unLimitContext(ALCdevice *);

static void init(ALCdevice *);
static void renderData(ALCdevice *, int samples);

#define UNUSED(x)  (void)(x)

The main idea behind the Send device is to take advantage of the fact that LWJGL only manages one context when using OpenAL. A context is like a container that holds samples and keeps track of where the listener is. In order to support multiple listeners, the Send device identifies the LWJGL context as the master context, and creates any number of slave contexts to represent additional listeners. Every time the device renders sound, it synchronizes every source from the master LWJGL context to the slave contexts. Then, it renders each context separately, using a different listener for each one. The rendered sound is made available via JNI to jMonkeyEngine.

To recap, the process is:

- Set the LWJGL context as "master" in the init() method.

- Create any number of additional contexts via addContext().

- At every call to renderData(), sync the master context with the slave contexts with syncContexts().

- syncContexts() calls syncSources() to sync all the sources which are in the master context.

- limitContext() and unLimitContext() make it possible to render only one context at a time.
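The recap can be sketched without OpenAL at all. In the toy model below, a context is just a listener position plus a list of source positions on a line, and "rendering" is a made-up distance falloff. All names (toy_context, toy_sync, toy_render, toy_step) are hypothetical; the point is only the shape of the loop: copy the master's sources into each slave, then render every context on its own.

```c
#include <assert.h>
#include <stddef.h>

#define TOY_MAX_SOURCES 8

/* Hypothetical stand-in for an OpenAL context: one listener position and
 * a list of source positions, all on a line. */
typedef struct toy_context {
    float listener;
    float sources[TOY_MAX_SOURCES];
    size_t numSources;
} toy_context;

/* Copy every source from master to slave; the listener is left alone. */
void toy_sync(const toy_context *master, toy_context *slave) {
    slave->numSources = master->numSources;
    for (size_t i = 0; i < master->numSources; i++)
        slave->sources[i] = master->sources[i];
}

/* "Render" one context: loudness falls off with distance to the listener.
 * The falloff formula is invented for this sketch. */
float toy_render(const toy_context *ctx) {
    float total = 0.0f;
    for (size_t i = 0; i < ctx->numSources; i++) {
        float d = ctx->sources[i] - ctx->listener;
        if (d < 0.0f) d = -d;
        total += 1.0f / (1.0f + d);
    }
    return total;
}

/* One step: sync the master (index 0) into every slave, then render each
 * context separately into its own output slot. */
void toy_step(toy_context *ctxs, size_t n, float *out) {
    assert(n >= 1);
    for (size_t i = 1; i < n; i++) toy_sync(&ctxs[0], &ctxs[i]);
    for (size_t i = 0; i < n; i++) out[i] = toy_render(&ctxs[i]);
}
```

With one source at position 0, a master listener at 0 and a slave listener at 3, toy_step() gives each listener a different loudness for the same source, which is the behavior the Send device is after.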

2.5 Necessary State

//////////////////// State

typedef struct context_data {
  ALfloat ClickRemoval[MAXCHANNELS];
  ALfloat PendingClicks[MAXCHANNELS];
  ALvoid *renderBuffer;
  ALCcontext *ctx;
} context_data;

typedef struct send_data {
  ALuint size;
  context_data **contexts;
  ALuint numContexts;
  ALuint maxContexts;
} send_data;

Switching between contexts is not the normal operation of a Device, and one of the problems with doing so is that a Device normally keeps around a few pieces of state such as the ClickRemoval array above which will become corrupted if the contexts are not rendered in parallel. The solution is to create a copy of this normally global device state for each context, and copy it back and forth into and out of the actual device state whenever a context is rendered.
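A minimal sketch of that copy-in, render, copy-out pattern, using a hypothetical toy_device whose mixer accumulates into a single piece of device-wide state (the names and the accumulate-only "mixer" are assumptions made for illustration, not OpenAL code):

```c
#include <assert.h>
#include <string.h>

#define TOY_CHANNELS 2

/* Hypothetical device with one piece of mixer state shared by every render. */
typedef struct toy_device { float carry[TOY_CHANNELS]; } toy_device;

/* Per-context private copy of that state. */
typedef struct toy_ctx_state { float carry[TOY_CHANNELS]; } toy_ctx_state;

/* Stand-in for the mixer: it reads and updates the device-wide state. */
void toy_mix(toy_device *dev, float input) {
    for (int c = 0; c < TOY_CHANNELS; c++) dev->carry[c] += input;
}

/* Render one context using its own private copy of the device state. */
void toy_render_isolated(toy_device *dev, toy_ctx_state *st, float input) {
    assert(dev != NULL && st != NULL);
    memcpy(dev->carry, st->carry, sizeof dev->carry);  /* swap in  */
    toy_mix(dev, input);
    memcpy(st->carry, dev->carry, sizeof st->carry);   /* save out */
}
```

Rendering context a, then b, then a again leaves a's state as if b had never run, because b's changes to the device were saved into b's own copy and overwritten when a was swapped back in.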

2.6 Synchronization Macros

//////////////////// Context Creation / Synchronization

#define _MAKE_SYNC(NAME, INIT_EXPR, GET_EXPR, SET_EXPR)  \
  void NAME (ALuint sourceID1, ALuint sourceID2,         \
             ALCcontext *ctx1, ALCcontext *ctx2,         \
             ALenum param){                              \
    INIT_EXPR;                                           \
    ALCcontext *current = alcGetCurrentContext();        \
    alcMakeContextCurrent(ctx1);                         \
    GET_EXPR;                                            \
    alcMakeContextCurrent(ctx2);                         \
    SET_EXPR;                                            \
    alcMakeContextCurrent(current);                      \
  }

#define MAKE_SYNC(NAME, TYPE, GET, SET)                  \
  _MAKE_SYNC(NAME,                                       \
             TYPE value,                                 \
             GET(sourceID1, param, &value),              \
             SET(sourceID2, param, value))

#define MAKE_SYNC3(NAME, TYPE, GET, SET)                        \
  _MAKE_SYNC(NAME,                                              \
             TYPE value1; TYPE value2; TYPE value3;,            \
             GET(sourceID1, param, &value1, &value2, &value3),  \
             SET(sourceID2, param,  value1,  value2,  value3))

MAKE_SYNC( syncSourcei,  ALint,   alGetSourcei,  alSourcei);
MAKE_SYNC( syncSourcef,  ALfloat, alGetSourcef,  alSourcef);
MAKE_SYNC3(syncSource3i, ALint,   alGetSource3i, alSource3i);
MAKE_SYNC3(syncSource3f, ALfloat, alGetSource3f, alSource3f);

Setting the state of an OpenAL source is done with the alSourcei, alSourcef, alSource3i, and alSource3f functions. In order to completely synchronize two sources, it is necessary to use all of them. These macros help to condense the otherwise repetitive synchronization code involving these similar low-level OpenAL functions.
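The same macro technique can be shown on a toy type with no OpenAL dependency. In this sketch the toy_source struct, its getters and setters, and TOY_MAKE_SYNC are all hypothetical; the macro stamps out one "copy this property from master to slave" function per value type, which is the role MAKE_SYNC plays above (minus the context switching).

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical source with one int property and one float property. */
typedef struct toy_source { int looping; float gain; } toy_source;

void toy_get_i(const toy_source *s, int *v)   { *v = s->looping; }
void toy_set_i(toy_source *s, int v)          { s->looping = v; }
void toy_get_f(const toy_source *s, float *v) { *v = s->gain; }
void toy_set_f(toy_source *s, float v)        { s->gain = v; }

/* Generate a function that reads one property from `master` with GET and
 * writes it to `slave` with SET, for the given value TYPE. */
#define TOY_MAKE_SYNC(NAME, TYPE, GET, SET)                   \
    void NAME(const toy_source *master, toy_source *slave) {  \
        TYPE value;                                           \
        assert(master != NULL && slave != NULL);              \
        GET(master, &value);                                  \
        SET(slave, value);                                    \
    }

TOY_MAKE_SYNC(toy_sync_i, int,   toy_get_i, toy_set_i)
TOY_MAKE_SYNC(toy_sync_f, float, toy_get_f, toy_set_f)
```

Calling toy_sync_i() and toy_sync_f() on a master and a slave copies both properties across; adding a new property type costs one more TOY_MAKE_SYNC line rather than another hand-written function.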

2.7 Source Synchronization

void syncSources(ALsource *masterSource, ALsource *slaveSource,
                 ALCcontext *masterCtx, ALCcontext *slaveCtx){
  ALuint master = masterSource->source;
  ALuint slave = slaveSource->source;
  ALCcontext *current = alcGetCurrentContext();

  syncSourcef(master,slave,masterCtx,slaveCtx,AL_PITCH);
  syncSourcef(master,slave,masterCtx,slaveCtx,AL_GAIN);
  syncSourcef(master,slave,masterCtx,slaveCtx,AL_MAX_DISTANCE);
  syncSourcef(master,slave,masterCtx,slaveCtx,AL_ROLLOFF_FACTOR);
  syncSourcef(master,slave,masterCtx,slaveCtx,AL_REFERENCE_DISTANCE);
  syncSourcef(master,slave,masterCtx,slaveCtx,AL_MIN_GAIN);
  syncSourcef(master,slave,masterCtx,slaveCtx,AL_MAX_GAIN);
  syncSourcef(master,slave,masterCtx,slaveCtx,AL_CONE_OUTER_GAIN);
  syncSourcef(master,slave,masterCtx,slaveCtx,AL_CONE_INNER_ANGLE);
  syncSourcef(master,slave,masterCtx,slaveCtx,AL_CONE_OUTER_ANGLE);
  syncSourcef(master,slave,masterCtx,slaveCtx,AL_SEC_OFFSET);
  syncSourcef(master,slave,masterCtx,slaveCtx,AL_SAMPLE_OFFSET);
  syncSourcef(master,slave,masterCtx,slaveCtx,AL_BYTE_OFFSET);

  syncSource3f(master,slave,masterCtx,slaveCtx,AL_POSITION);
  syncSource3f(master,slave,masterCtx,slaveCtx,AL_VELOCITY);
  syncSource3f(master,slave,masterCtx,slaveCtx,AL_DIRECTION);

  syncSourcei(master,slave,masterCtx,slaveCtx,AL_SOURCE_RELATIVE);
  syncSourcei(master,slave,masterCtx,slaveCtx,AL_LOOPING);

  alcMakeContextCurrent(masterCtx);
  ALint source_type;
  alGetSourcei(master, AL_SOURCE_TYPE, &source_type);

  // Only static sources are currently synchronized!
  if (AL_STATIC == source_type){
    ALint master_buffer;
    ALint slave_buffer;
    alGetSourcei(master, AL_BUFFER, &master_buffer);
    alcMakeContextCurrent(slaveCtx);
    alGetSourcei(slave, AL_BUFFER, &slave_buffer);
    if (master_buffer != slave_buffer){
      alSourcei(slave, AL_BUFFER, master_buffer);
    }
  }

  // Synchronize the state of the two sources.
  alcMakeContextCurrent(masterCtx);
  ALint masterState;
  ALint slaveState;

  alGetSourcei(master, AL_SOURCE_STATE, &masterState);
  alcMakeContextCurrent(slaveCtx);
  alGetSourcei(slave, AL_SOURCE_STATE, &slaveState);

  if (masterState != slaveState){
    switch (masterState){
    case AL_INITIAL : alSourceRewind(slave); break;
    case AL_PLAYING : alSourcePlay(slave);   break;
    case AL_PAUSED  : alSourcePause(slave);  break;
    case AL_STOPPED : alSourceStop(slave);   break;
    }
  }
  // Restore whatever context was previously active.
  alcMakeContextCurrent(current);
}

This function is long because it has to exhaustively go through all the possible state that a source can have and make sure that it is the same between the master and slave sources. I'd like to take this moment to salute the OpenAL Reference Manual, which provides a very good description of OpenAL's internals.

2.8 Context Synchronization

void syncContexts(ALCcontext *master, ALCcontext *slave){
  /* If there aren't sufficient sources in slave to mirror
     the sources in master, create them. */
  ALCcontext *current = alcGetCurrentContext();

  UIntMap *masterSourceMap = &(master->SourceMap);
  UIntMap *slaveSourceMap = &(slave->SourceMap);
  ALuint numMasterSources = masterSourceMap->size;
  ALuint numSlaveSources = slaveSourceMap->size;

  alcMakeContextCurrent(slave);
  if (numSlaveSources < numMasterSources){
    ALuint numMissingSources = numMasterSources - numSlaveSources;
    ALuint newSources[numMissingSources];
    alGenSources(numMissingSources, newSources);
  }

  /* Now, slave is guaranteed to have at least as many sources
     as master.  Sync each source from master to the corresponding
     source in slave. */
  int i;
  for(i = 0; i < masterSourceMap->size; i++){
    syncSources((ALsource*)masterSourceMap->array[i].value,
                (ALsource*)slaveSourceMap->array[i].value,
                master, slave);
  }
  alcMakeContextCurrent(current);
}

Most of the hard work in Context Synchronization is done in syncSources(). The only thing that syncContexts() has to worry about is automatically creating new sources whenever a slave context does not have the same number of sources as the master context.

2.9 Context Creation

static void addContext(ALCdevice *Device, ALCcontext *context){
  send_data *data = (send_data*)Device->ExtraData;
  // expand array if necessary
  if (data->numContexts >= data->maxContexts){
    ALuint newMaxContexts = data->maxContexts*2 + 1;
    // contexts is an array of pointers, so size it by the pointer type
    data->contexts = realloc(data->contexts,
                             newMaxContexts*sizeof(*data->contexts));
    data->maxContexts = newMaxContexts;
  }
  // create context_data and add it to the main array
  context_data *ctxData;
  ctxData = (context_data*)calloc(1, sizeof(*ctxData));
  ctxData->renderBuffer =
    malloc(BytesFromDevFmt(Device->FmtType) *
           Device->NumChan * Device->UpdateSize);
  ctxData->ctx = context;

  data->contexts[data->numContexts] = ctxData;
  data->numContexts++;
}

Here, the slave context is created, and its data is stored in the device-wide ExtraData structure. The renderBuffer that is created here is where the rendered sound samples for this slave context will eventually go.
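The growth policy used for the context array (double the capacity and add one when full) can be sketched on its own. The toy_list type below is hypothetical, and unlike the device code this sketch checks the result of realloc before overwriting the old pointer, which is an assumption about how one might want to harden it rather than what send.c does.

```c
#include <assert.h>
#include <stdlib.h>

/* Hypothetical growable array of pointers. */
typedef struct toy_list {
    int **items;            /* array of pointers: elements are sizeof(int*) */
    size_t count, capacity;
} toy_list;

/* Append `item`, growing the array to 2*capacity + 1 when it is full.
 * Returns 0 on success, -1 if memory could not be obtained. */
int toy_list_add(toy_list *list, int *item) {
    assert(list != NULL);
    if (list->count >= list->capacity) {
        size_t newCap = list->capacity * 2 + 1;
        int **grown = realloc(list->items, newCap * sizeof *grown);
        if (grown == NULL) return -1;   /* the old array is still valid */
        list->items = grown;
        list->capacity = newCap;
    }
    list->items[list->count++] = item;
    return 0;
}
```

Starting from an empty list, the capacity goes 1, 3, 7, 15, and so on, so adding many listeners costs only a handful of reallocations.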

2.10 Context Switching

//////////////////// Context Switching

/* A device brings along with it two pieces of state
 * which have to be swapped in and out with each context.
 */
static void swapInContext(ALCdevice *Device, context_data *ctxData){
  memcpy(Device->ClickRemoval, ctxData->ClickRemoval,
         sizeof(ALfloat)*MAXCHANNELS);
  memcpy(Device->PendingClicks, ctxData->PendingClicks,
         sizeof(ALfloat)*MAXCHANNELS);
}

static void saveContext(ALCdevice *Device, context_data *ctxData){
  memcpy(ctxData->ClickRemoval, Device->ClickRemoval,
         sizeof(ALfloat)*MAXCHANNELS);
  memcpy(ctxData->PendingClicks, Device->PendingClicks,
         sizeof(ALfloat)*MAXCHANNELS);
}

static ALCcontext **currentContext;
static ALuint currentNumContext;
/* Holds the single context being rendered.  It is static so that the
 * pointer stored in Device->Contexts remains valid after limitContext()
 * returns (the address of the ctx parameter would not). */
static ALCcontext *limitedContext;

/* By default, all contexts are rendered at once for each call to aluMixData.
 * This function uses the internals of the ALCdevice struct to temporarily
 * cause aluMixData to only render the chosen context.
 */
static void limitContext(ALCdevice *Device, ALCcontext *ctx){
  currentContext     = Device->Contexts;
  currentNumContext  = Device->NumContexts;
  limitedContext     = ctx;
  Device->Contexts   = &limitedContext;
  Device->NumContexts = 1;
}

static void unLimitContext(ALCdevice *Device){
  Device->Contexts = currentContext;
  Device->NumContexts = currentNumContext;
}

OpenAL normally renders all contexts in parallel, outputting the whole result to the buffer. It does this by iterating over the Device->Contexts array and rendering each context to the buffer in turn. By temporarily setting Device->NumContexts to 1 and adjusting the Device's context list to put the desired context-to-be-rendered into position 0, we can trick OpenAL into rendering each context separately from all the others.
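The trick is easier to see on a toy device. In this sketch a "context" is just one int, the "mixer" sums every context the device lists, and all names (toy_device, toy_mix_all, toy_mix_one) are hypothetical. The one-element list is kept alive for the whole time the mixer can see it, which is the property that matters when swapping the list.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical device: a list of contexts, each reduced to one int sample. */
typedef struct toy_device {
    const int **contexts;
    size_t numContexts;
} toy_device;

/* Stand-in for the mixer: sums every context the device currently lists. */
int toy_mix_all(const toy_device *dev) {
    int sum = 0;
    for (size_t i = 0; i < dev->numContexts; i++) sum += *dev->contexts[i];
    return sum;
}

/* Render only `ctx` by temporarily swapping the device's context list
 * for a one-element list, then restoring the original list. */
int toy_mix_one(toy_device *dev, const int *ctx) {
    assert(dev != NULL && ctx != NULL);
    const int **savedList = dev->contexts;
    size_t savedCount = dev->numContexts;
    const int *only[1] = { ctx };   /* valid until this function returns */
    dev->contexts = only;
    dev->numContexts = 1;
    int result = toy_mix_all(dev);
    dev->contexts = savedList;      /* restore before `only` goes away */
    dev->numContexts = savedCount;
    return result;
}
```

With contexts holding 10 and 20, toy_mix_all() yields 30 while toy_mix_one() on the second context yields 20, and the device's full list is intact afterwards.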

2.11 Main Device Loop

//////////////////// Main Device Loop

/* Establish the LWJGL context as the master context, which will
 * be synchronized to all the slave contexts
 */
static void init(ALCdevice *Device){
  ALCcontext *masterContext = alcGetCurrentContext();
  addContext(Device, masterContext);
}

static void renderData(ALCdevice *Device, int samples){
  if(!Device->Connected){return;}
  send_data *data = (send_data*)Device->ExtraData;
  ALCcontext *current = alcGetCurrentContext();

  ALuint i;
  for (i = 1; i < data->numContexts; i++){
    syncContexts(data->contexts[0]->ctx , data->contexts[i]->ctx);
  }

  if ((ALuint) samples > Device->UpdateSize){
    printf("exceeding internal buffer size; dropping samples\n");
    // UpdateSize is unsigned, so print it with %u
    printf("requested %d; available %u\n", samples, Device->UpdateSize);
    samples = (int) Device->UpdateSize;
  }

  for (i = 0; i < data->numContexts; i++){
    context_data *ctxData = data->contexts[i];
    ALCcontext *ctx = ctxData->ctx;
    alcMakeContextCurrent(ctx);
    limitContext(Device, ctx);
    swapInContext(Device, ctxData);
    aluMixData(Device, ctxData->renderBuffer, samples);
    saveContext(Device, ctxData);
    unLimitContext(Device);
  }
  alcMakeContextCurrent(current);
}

The main loop synchronizes the master LWJGL context with all the slave contexts, then iterates through each context, rendering just that context to its audio-sample storage buffer.

2.12 JNI Methods

At this point, we have the ability to create multiple listeners by using the master/slave context trick, and the rendered audio data is waiting patiently in internal buffers, one for each listener. We need a way to transport this information to Java, and also a way to drive this device from Java. The following JNI interface code is inspired by the LWJGL JNI interface to OpenAL.

2.12.1 Stepping the Device

//////////////////// JNI Methods

#include "com_aurellem_send_AudioSend.h"

/*
 * Class:     com_aurellem_send_AudioSend
 * Method:    nstep
 * Signature: (JI)V
 */
JNIEXPORT void JNICALL Java_com_aurellem_send_AudioSend_nstep
(JNIEnv *env, jclass clazz, jlong device, jint samples){
  UNUSED(env);UNUSED(clazz);
  renderData((ALCdevice*)((intptr_t)device), samples);
}

This device, unlike most of the other devices in OpenAL, does not render sound unless asked. This enables the system to slow down or speed up depending on the needs of the AIs who are using it to listen. If the device tried to render samples in real-time, a complicated AI whose mind takes 100 seconds of computer time to simulate 1 second of AI-time would miss almost all of the sound in its environment.
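Because rendering is driven by the caller, the number of samples to request per step follows from simulated time rather than wall-clock time. The sketch below shows that arithmetic together with the clamp that renderData() applies; both helper names are hypothetical.

```c
#include <assert.h>

/* Number of samples that cover one simulated frame of the given length. */
int toy_samples_for_frame(float secondsPerFrame, int sampleRate) {
    assert(secondsPerFrame >= 0.0f && sampleRate > 0);
    return (int)(secondsPerFrame * (float)sampleRate);
}

/* Clamp a request to the device's buffer size, as renderData() does
 * when more samples are requested than the buffer can hold. */
int toy_clamp_samples(int requested, int updateSize) {
    assert(requested >= 0 && updateSize >= 0);
    return requested > updateSize ? updateSize : requested;
}
```

At 44100 samples per second, a simulated frame of half a second asks for 22050 samples no matter how long the computer took to simulate it; whatever exceeds the device buffer is dropped.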

2.12.2 Device->Java Data Transport

/*
 * Class:     com_aurellem_send_AudioSend
 * Method:    ngetSamples
 * Signature: (JLjava/nio/ByteBuffer;III)V
 */
JNIEXPORT void JNICALL Java_com_aurellem_send_AudioSend_ngetSamples
(JNIEnv *env, jclass clazz, jlong device, jobject buffer, jint position,
 jint samples, jint n){
  UNUSED(clazz);

  ALvoid *buffer_address =
    ((ALbyte *)(((char*)(*env)->GetDirectBufferAddress(env, buffer))
                + position));
  ALCdevice *recorder = (ALCdevice*) ((intptr_t)device);
  send_data *data = (send_data*)recorder->ExtraData;
  // valid context indices are 0 .. numContexts-1
  if ((ALuint)n >= data->numContexts){return;}
  memcpy(buffer_address, data->contexts[n]->renderBuffer,
         BytesFromDevFmt(recorder->FmtType) * recorder->NumChan * samples);
}

This is the transport layer between C and Java that will eventually allow us to access rendered sound data from clojure.
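The copy itself is a size calculation plus a bounds check. The following sketch reproduces it without JNI; the function names and the extra destination-capacity check are assumptions added for illustration and are not part of the device code.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Bytes occupied by `samples` frames of interleaved audio. */
size_t toy_frame_bytes(size_t bytesPerSample, size_t channels, size_t samples) {
    return bytesPerSample * channels * samples;
}

/* Copy listener n's rendered audio to dst + position.
 * Returns the number of bytes copied, or 0 if n is out of range or the
 * destination is too small to hold `bytes` starting at `position`. */
size_t toy_get_samples(unsigned char *dst, size_t dstCapacity, size_t position,
                       unsigned char *const *renderBuffers,
                       size_t numListeners, size_t n, size_t bytes) {
    assert(dst != NULL && renderBuffers != NULL);
    if (n >= numListeners) return 0;   /* valid indices: 0..numListeners-1 */
    if (position > dstCapacity || bytes > dstCapacity - position) return 0;
    memcpy(dst + position, renderBuffers[n], bytes);
    return bytes;
}
```

For 16-bit mono audio, two samples occupy four bytes; asking for listener index 2 when only two listeners exist copies nothing.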

2.12.3 Listener Management

addListener, setNthListenerf, and setNthListener3f are necessary to change the properties of any listener other than the master one, since only the listener of the current active context is affected by the normal OpenAL listener calls.

/*
 * Class:     com_aurellem_send_AudioSend
 * Method:    naddListener
 * Signature: (J)V
 */
JNIEXPORT void JNICALL Java_com_aurellem_send_AudioSend_naddListener
(JNIEnv *env, jclass clazz, jlong device){
  UNUSED(env); UNUSED(clazz);
  //printf("creating new context via naddListener\n");
  ALCdevice *Device = (ALCdevice*) ((intptr_t)device);
  ALCcontext *new = alcCreateContext(Device, NULL);
  addContext(Device, new);
}

/*
 * Class:     com_aurellem_send_AudioSend
 * Method:    nsetNthListener3f
 * Signature: (IFFFJI)V
 */
JNIEXPORT void JNICALL Java_com_aurellem_send_AudioSend_nsetNthListener3f
(JNIEnv *env, jclass clazz, jint param,
 jfloat v1, jfloat v2, jfloat v3, jlong device, jint contextNum){
  UNUSED(env);UNUSED(clazz);

  ALCdevice *Device = (ALCdevice*) ((intptr_t)device);
  send_data *data = (send_data*)Device->ExtraData;

  ALCcontext *current = alcGetCurrentContext();
  // valid context indices are 0 .. numContexts-1
  if ((ALuint)contextNum >= data->numContexts){return;}
  alcMakeContextCurrent(data->contexts[contextNum]->ctx);
  alListener3f(param, v1, v2, v3);
  alcMakeContextCurrent(current);
}

/*
 * Class:     com_aurellem_send_AudioSend
 * Method:    nsetNthListenerf
 * Signature: (IFJI)V
 */
JNIEXPORT void JNICALL Java_com_aurellem_send_AudioSend_nsetNthListenerf
(JNIEnv *env, jclass clazz, jint param, jfloat v1, jlong device,
 jint contextNum){
  UNUSED(env);UNUSED(clazz);

  ALCdevice *Device = (ALCdevice*) ((intptr_t)device);
  send_data *data = (send_data*)Device->ExtraData;

  ALCcontext *current = alcGetCurrentContext();
  // valid context indices are 0 .. numContexts-1
  if ((ALuint)contextNum >= data->numContexts){return;}
  alcMakeContextCurrent(data->contexts[contextNum]->ctx);
  alListenerf(param, v1);
  alcMakeContextCurrent(current);
}

2.12.4 Initialization

initDevice is called from the Java side after LWJGL has created its context, and before any calls to addListener. It establishes the LWJGL context as the master context.

getAudioFormat is a convenience function that uses JNI to build up a javax.sound.sampled.AudioFormat object from data in the Device. This way, there is no ambiguity about what the bits created by step and returned by getSamples mean.

/*
 * Class:     com_aurellem_send_AudioSend
 * Method:    ninitDevice
 * Signature: (J)V
 */
JNIEXPORT void JNICALL Java_com_aurellem_send_AudioSend_ninitDevice
(JNIEnv *env, jclass clazz, jlong device){
  UNUSED(env);UNUSED(clazz);
  ALCdevice *Device = (ALCdevice*) ((intptr_t)device);
  init(Device);
}

/*
 * Class:     com_aurellem_send_AudioSend
 * Method:    ngetAudioFormat
 * Signature: (J)Ljavax/sound/sampled/AudioFormat;
 */
JNIEXPORT jobject JNICALL Java_com_aurellem_send_AudioSend_ngetAudioFormat
(JNIEnv *env, jclass clazz, jlong device){
  UNUSED(clazz);
  jclass AudioFormatClass =
    (*env)->FindClass(env, "javax/sound/sampled/AudioFormat");
  // AudioFormat(float sampleRate, int sampleSizeInBits, int channels,
  //             boolean signed, boolean bigEndian)
  jmethodID AudioFormatConstructor =
    (*env)->GetMethodID(env, AudioFormatClass, "<init>", "(FIIZZ)V");

  ALCdevice *Device = (ALCdevice*) ((intptr_t)device);
  int isSigned;
  switch (Device->FmtType)
    {
    case DevFmtUByte:
    case DevFmtUShort: isSigned = 0; break;
    default : isSigned = 1;
    }
  float frequency = Device->Frequency;
  int bitsPerSample = (8 * BytesFromDevFmt(Device->FmtType));
  int channels = Device->NumChan;
  jobject format = (*env)->
    NewObject(
              env,AudioFormatClass,AudioFormatConstructor,
              frequency,
              bitsPerSample,
              channels,
              isSigned,
              0);
  return format;
}

2.13 Boring Device Management Stuff / Memory Cleanup

This code is more-or-less copied verbatim from the other OpenAL Devices. It's the basis for OpenAL's primitive object system.

//////////////////// Device Initialization / Management

static const ALCchar sendDevice[] = "Multiple Audio Send";

static ALCboolean send_open_playback(ALCdevice *device,
                                     const ALCchar *deviceName)
{
  send_data *data;
  // stop any buffering for stdout, so that I can
  // see the printf statements in my terminal immediately
  setbuf(stdout, NULL);

  if(!deviceName)
    deviceName = sendDevice;
  else if(strcmp(deviceName, sendDevice) != 0)
    return ALC_FALSE;
  data = (send_data*)calloc(1, sizeof(*data));
  device->szDeviceName = strdup(deviceName);
  device->ExtraData = data;
  return ALC_TRUE;
}

static void send_close_playback(ALCdevice *device)
{
  send_data *data = (send_data*)device->ExtraData;
  alcMakeContextCurrent(NULL);
  ALuint i;
  // Destroy all slave contexts. LWJGL will take care of
  // its own context.
  for (i = 1; i < data->numContexts; i++){
    context_data *ctxData = data->contexts[i];
    alcDestroyContext(ctxData->ctx);
    free(ctxData->renderBuffer);
    free(ctxData);
  }
  free(data);
  device->ExtraData = NULL;
}

static ALCboolean send_reset_playback(ALCdevice *device)
{
  SetDefaultWFXChannelOrder(device);
  return ALC_TRUE;
}

static void send_stop_playback(ALCdevice *Device){
  UNUSED(Device);
}

static const BackendFuncs send_funcs = {
  send_open_playback,
  send_close_playback,
  send_reset_playback,
  send_stop_playback,
  NULL,
  NULL,  /* These would be filled with functions to    */
  NULL,  /* handle capturing audio if we were into that */
  NULL,  /* sort of thing...                           */
  NULL,
  NULL
};

ALCboolean alc_send_init(BackendFuncs *func_list){
  *func_list = send_funcs;
  return ALC_TRUE;
}

void alc_send_deinit(void){}

void alc_send_probe(enum DevProbe type)
{
  switch(type)
    {
    case DEVICE_PROBE:
      AppendDeviceList(sendDevice);
      break;
    case ALL_DEVICE_PROBE:
      AppendAllDeviceList(sendDevice);
      break;
    case CAPTURE_DEVICE_PROBE:
      break;
    }
}

3 The Java interface, AudioSend

The Java interface to the Send Device follows naturally from the JNI definitions. The only thing here of note is the deviceID. This is available from LWJGL, but the only way to get it is with reflection. Unfortunately, there is no other way to control the Send device than to obtain a pointer to it.

package com.aurellem.send;

import java.nio.ByteBuffer;

import javax.sound.sampled.AudioFormat;

public class AudioSend {

  private final long deviceID;

  public AudioSend(long deviceID){
    this.deviceID = deviceID;
  }

  /** This establishes the LWJGL context as the context which
   *  will be copied to all other contexts.  It must be called
   *  before any calls to <code>addListener();</code>
   */
  public void initDevice(){
    ninitDevice(this.deviceID);}
  public static native void ninitDevice(long device);

  /**
   * The send device does not automatically process sound.  This
   * step function will cause the desired number of samples to
   * be processed for each listener.  The results will then be
   * available via calls to <code>getSamples()</code> for each
   * listener.
   * @param samples
   */
  public void step(int samples){
    nstep(this.deviceID, samples);}
  public static native void nstep(long device, int samples);

  /**
   * Retrieve the final rendered sound for a particular
   * listener.  <code>contextNum == 0</code> is the main LWJGL
   * context.
   * @param buffer
   * @param samples
   * @param contextNum
   */
  public void getSamples(ByteBuffer buffer,
                         int samples, int contextNum){
    ngetSamples(this.deviceID, buffer,
                buffer.position(), samples, contextNum);}
  public static native void ngetSamples(long device,
                                        ByteBuffer buffer,
                                        int position,
                                        int samples,
                                        int contextNum);

  /**
   * Create an additional listener on the recorder device.  The
   * device itself will manage this listener and synchronize it
   * with the main LWJGL context.  Processed sound samples for
   * this listener will be available via a call to
   * <code>getSamples()</code> with <code>contextNum</code>
   * equal to the number of times this method has been called.
   */
  public void addListener(){naddListener(this.deviceID);}
  public static native void naddListener(long device);

  /**
   * This will internally call <code>alListener3f</code> in the
   * appropriate slave context and update that context's
   * listener's parameters.  Calling this for a number greater
   * than the current number of slave contexts will have no
   * effect.
   * @param pname
   * @param v1
   * @param v2
   * @param v3
   * @param contextNum
   */
  public void
    setNthListener3f(int pname, float v1,
                     float v2, float v3, int contextNum){
    nsetNthListener3f(pname, v1, v2, v3,
                      this.deviceID, contextNum);}
  public static native void
    nsetNthListener3f(int pname, float v1, float v2,
                      float v3, long device, int contextNum);

  /**
   * This will internally call <code>alListenerf</code> in the
   * appropriate slave context and update that context's
   * listener's parameters.  Calling this for a number greater
   * than the current number of slave contexts will have no
   * effect.
   * @param pname
   * @param v1
   * @param contextNum
   */
  public void setNthListenerf(int pname, float v1, int contextNum){
    nsetNthListenerf(pname, v1, this.deviceID, contextNum);}
  public static native void
    nsetNthListenerf(int pname, float v1,
                     long device, int contextNum);

  /**
   * Retrieve the AudioFormat which the device is using.  This
   * format is itself derived from the OpenAL config file under
   * the "format" variable.
   */
  public AudioFormat getAudioFormat(){
    return ngetAudioFormat(this.deviceID);}
  public static native AudioFormat ngetAudioFormat(long device);
}

4 The Java Audio Renderer, AudioSendRenderer

package com.aurellem.capture.audio;

import java.io.IOException;
import java.lang.reflect.Field;
import java.nio.ByteBuffer;
import java.util.HashMap;
import java.util.Vector;
import java.util.concurrent.CountDownLatch;
import java.util.logging.Level;
import java.util.logging.Logger;

import javax.sound.sampled.AudioFormat;

import org.lwjgl.LWJGLException;
import org.lwjgl.openal.AL;
import org.lwjgl.openal.AL10;
import org.lwjgl.openal.ALCdevice;
import org.lwjgl.openal.OpenALException;

import com.aurellem.send.AudioSend;
import com.jme3.audio.Listener;
import com.jme3.audio.lwjgl.LwjglAudioRenderer;
import com.jme3.math.Vector3f;
import com.jme3.system.JmeSystem;
import com.jme3.system.Natives;
import com.jme3.util.BufferUtils;

public class AudioSendRenderer
  extends LwjglAudioRenderer implements MultiListener {

  private AudioSend audioSend;
  private AudioFormat outFormat;

  /**
   * Keeps track of all the listeners which have been registered
   * so far.  The first element is <code>null</code>, which
   * represents the zeroth LWJGL listener which is created
   * automatically.
   */
  public Vector<Listener> listeners = new Vector<Listener>();

  public void initialize(){
    super.initialize();
    listeners.add(null);
  }

  /**
   * This is to call the native methods which require the OpenAL
   * device ID.  Currently it is obtained through reflection.
   */
  private long deviceID;

  /**
   * To ensure that <code>deviceID</code> and
   * <code>listeners</code> are properly initialized before any
   * additional listeners are added.
   */
  private CountDownLatch latch = new CountDownLatch(1);

  /**
   * Each listener (including the main LWJGL listener) can be
   * registered with a <code>SoundProcessor</code>, which this
   * Renderer will call whenever there is new audio data to be
   * processed.
   */
  public HashMap<Listener, SoundProcessor> soundProcessorMap =
    new HashMap<Listener, SoundProcessor>();

  /**
   * Create a new slave context on the recorder device which
   * will render all the sounds in the main LWJGL context with
   * respect to this listener.
   */
  public void addListener(Listener l) {
    try {this.latch.await();}
    catch (InterruptedException e) {e.printStackTrace();}
    audioSend.addListener();
    this.listeners.add(l);
    l.setRenderer(this);
  }

  /**
   * Whenever new data is rendered in the perspective of this
   * listener, this Renderer will send that data to the
   * SoundProcessor of your choosing.
   */
  public void registerSoundProcessor(Listener l, SoundProcessor sp) {
    this.soundProcessorMap.put(l, sp);
  }

  /**
   * Registers a SoundProcessor for the main LWJGL context.  If all
   * you want to do is record the sound you would normally hear in
   * your application, then this is the only method you have to
   * worry about.
   */
  public void registerSoundProcessor(SoundProcessor sp){
    // register a sound processor for the default listener.
    this.soundProcessorMap.put(null, sp);
  }

  private static final Logger logger =
    Logger.getLogger(AudioSendRenderer.class.getName());

  /**
   * Instead of taking whatever device is available on the system,
   * this call creates the "Multiple Audio Send" device, which
   * supports multiple listeners in a limited capacity.  For each
   * listener, the device renders it not to the sound device, but
   * instead to buffers which it makes available via JNI.
   */
  public void initInThread(){
    try{
      switch (JmeSystem.getPlatform()){
      case Windows64:
        Natives.extractNativeLib("windows/audioSend",
                                 "OpenAL64", true, true);
        break;
      case Windows32:
        Natives.extractNativeLib("windows/audioSend",
                                 "OpenAL32", true, true);
        break;
      case Linux64:
        Natives.extractNativeLib("linux/audioSend",
                                 "openal64", true, true);
        break;
      case Linux32:
        Natives.extractNativeLib("linux/audioSend",
                                 "openal", true, true);
        break;
      }
    }
    catch (IOException ex) {ex.printStackTrace();}

    try{
      if (!AL.isCreated()){
        AL.create("Multiple Audio Send", 44100, 60, false);
      }
    }catch (OpenALException ex){
      logger.log(Level.SEVERE, "Failed to load audio library", ex);
      System.exit(1);
      return;
    }catch (LWJGLException ex){
      logger.log(Level.SEVERE, "Failed to load audio library", ex);
      System.exit(1);
      return;
    }
    super.initInThread();

    ALCdevice device = AL.getDevice();

    // RLM: use reflection to grab the ID of our device for use
    // later.
    try {
      Field deviceIDField;
      deviceIDField = ALCdevice.class.getDeclaredField("device");
      deviceIDField.setAccessible(true);
      try {deviceID = (Long)deviceIDField.get(device);}
      catch (IllegalArgumentException e) {e.printStackTrace();}
      catch (IllegalAccessException e) {e.printStackTrace();}
      deviceIDField.setAccessible(false);}
    catch (SecurityException e) {e.printStackTrace();}
    catch (NoSuchFieldException e) {e.printStackTrace();}

    this.audioSend = new AudioSend(this.deviceID);
    this.outFormat = audioSend.getAudioFormat();
    initBuffer();

    // The LWJGL context must be established as the master context
    // before any other listeners can be created on this device.
    audioSend.initDevice();
    // Now, everything is initialized, and it is safe to add more
    // listeners.
    latch.countDown();
  }

  public void cleanup(){
    for(SoundProcessor sp : this.soundProcessorMap.values()){
      sp.cleanup();
    }
    super.cleanup();
  }

  public void updateAllListeners(){
    for (int i = 0; i < this.listeners.size(); i++){
      Listener lis = this.listeners.get(i);
      if (null != lis){
        Vector3f location = lis.getLocation();
        Vector3f velocity = lis.getVelocity();
        Vector3f orientation = lis.getUp();
        float gain = lis.getVolume();
        audioSend.setNthListener3f
          (AL10.AL_POSITION,
           location.x, location.y, location.z, i);
        audioSend.setNthListener3f
          (AL10.AL_VELOCITY,
           velocity.x, velocity.y, velocity.z, i);
        audioSend.setNthListener3f
          (AL10.AL_ORIENTATION,
           orientation.x, orientation.y, orientation.z, i);
        audioSend.setNthListenerf(AL10.AL_GAIN, gain, i);
      }
    }
  }

  private ByteBuffer buffer;

  public static final int MIN_FRAMERATE = 10;

  private void initBuffer(){
    int bufferSize =
      (int)(this.outFormat.getSampleRate() /
            ((float)MIN_FRAMERATE)) *
      this.outFormat.getFrameSize();

    this.buffer = BufferUtils.createByteBuffer(bufferSize);
  }

  public void dispatchAudio(float tpf){

    int samplesToGet = (int) (tpf * outFormat.getSampleRate());
    try {latch.await();}
    catch (InterruptedException e) {e.printStackTrace();}
    audioSend.step(samplesToGet);
    updateAllListeners();

    for (int i = 0; i < this.listeners.size(); i++){
      buffer.clear();
      audioSend.getSamples(buffer, samplesToGet, i);
      SoundProcessor sp =
        this.soundProcessorMap.get(this.listeners.get(i));
      if (null != sp){
        sp.process
          (buffer,
           samplesToGet*outFormat.getFrameSize(), outFormat);}
    }
  }

  public void update(float tpf){
    super.update(tpf);
    dispatchAudio(tpf);
  }
}

The AudioSendRenderer is a modified version of the LwjglAudioRenderer which implements the MultiListener interface to allow creating, and reading sound data from, more than one Listener object.

4.1 MultiListener.java

package com.aurellem.capture.audio;

import com.jme3.audio.Listener;

/**
 * This interface lets you:
 *  1.) Get at rendered 3D-sound data.
 *  2.) Create additional listeners which each hear
 *      the world from their own perspective.
 * @author Robert McIntyre
 */
public interface MultiListener {

    void addListener(Listener l);
    void registerSoundProcessor(Listener l, SoundProcessor sp);
    void registerSoundProcessor(SoundProcessor sp);
}

4.2 SoundProcessors are like SceneProcessors

A SoundProcessor is analogous to a SceneProcessor. Every frame, the

SoundProcessor registered with a given Listener receives the

rendered sound data and can do whatever processing it wants with it.

#+include "../../jmeCapture/src/com/aurellem/capture/audio/SoundProcessor.java" src java
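Based on how SoundProcessor is used elsewhere in this post (the proxy in hearing-pipeline and the Dancer class in the Java example), an implementation needs only two methods: cleanup and process. The following is a minimal sketch, not the actual SoundProcessor.java. The interface is re-declared locally under the hypothetical name SoundProcessorSketch so that the example compiles without jMonkeyEngine, and PeakMeter is an illustrative processor that assumes 16-bit signed PCM.

```java
import java.nio.ByteBuffer;
import java.nio.ByteOrder;
import javax.sound.sampled.AudioFormat;

// Hypothetical stand-in that mirrors the two methods this post
// calls on a SoundProcessor. It is NOT the real interface.
interface SoundProcessorSketch {
    void cleanup();
    void process(ByteBuffer audioSamples, int numSamples, AudioFormat format);
}

// Illustrative processor: records the loudest sample of the latest frame.
// Assumes 16-bit signed PCM; numSamples is treated as a byte count, which
// is how AudioSendRenderer.dispatchAudio calls process in this post.
class PeakMeter implements SoundProcessorSketch {
    int peak = 0;

    public void cleanup() {}   // nothing to release

    public void process(ByteBuffer audioSamples, int numSamples,
                        AudioFormat format) {
        // Work on a duplicate so the caller's position/limit are untouched.
        ByteBuffer view = audioSamples.duplicate();
        view.order(format.isBigEndian()
                   ? ByteOrder.BIG_ENDIAN : ByteOrder.LITTLE_ENDIAN);
        view.clear();
        view.limit(numSamples);
        peak = 0;
        while (view.remaining() >= 2) {
            peak = Math.max(peak, Math.abs((int) view.getShort()));
        }
    }
}
```

A processor like this would be handed to registerSoundProcessor in place of the real thing; anything stateful (open files, for instance) would be released in cleanup.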

5 Finally, Ears in clojure!

Now that the C and Java infrastructure is complete, the clojure

hearing abstraction is simple and closely parallels the vision

abstraction.

5.1 Hearing Pipeline

All sound rendering is done on the CPU, so hearing-pipeline is much less complicated than vision-pipeline. The bytes available in the ByteBuffer obtained from the send device have different meanings depending on the particular hardware of your system. That is why the AudioFormat object is necessary: it provides the meaning that the raw bytes lack. byteBuffer->pulse-vector uses the excellent conversion facilities from tritonus (javadoc) to generate a clojure vector of floats which represent the linear PCM encoded waveform of the sound. With linear PCM (pulse code modulation), -1.0 represents maximum rarefaction of the air while 1.0 represents maximum compression of the air at a given instant.
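To make the byte-to-float step concrete, here is a small sketch of what such a conversion does for one specific format. It is not the tritonus implementation: the class name PcmSketch is hypothetical, the format is assumed to be 16-bit signed little-endian PCM, and dividing by 32768 is one common scaling convention.

```java
import java.nio.ByteBuffer;
import java.nio.ByteOrder;

// Hypothetical helper, for illustration only.
class PcmSketch {
    // Decode 16-bit signed little-endian PCM bytes into floats.
    // -32768 maps to -1.0 and 32767 maps to just under 1.0.
    static float[] toFloats(byte[] bytes) {
        ByteBuffer bb = ByteBuffer.wrap(bytes).order(ByteOrder.LITTLE_ENDIAN);
        float[] out = new float[bytes.length / 2];
        for (int i = 0; i < out.length; i++) {
            out[i] = bb.getShort() / 32768f;
        }
        return out;
    }
}
```

With a different AudioFormat (8-bit, big-endian, unsigned, multiple channels) the same bytes would decode to entirely different values, which is why the format object travels alongside the buffer.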

(in-ns 'cortex.hearing)

(defn hearing-pipeline
  "Creates a SoundProcessor which wraps a sound processing
   continuation function. The continuation is a function that takes
   [#^ByteBuffer b #^Integer numSamples #^AudioFormat af ], each of
   which has already been appropriately sized."
  [continuation]
  (proxy [SoundProcessor] []
    (cleanup [])
    (process
      [#^ByteBuffer audioSamples numSamples #^AudioFormat audioFormat]
      (continuation audioSamples numSamples audioFormat))))

(defn byteBuffer->pulse-vector
  "Extract the sound samples from the byteBuffer as a PCM encoded
   waveform with values ranging from -1.0 to 1.0 into a vector of
   floats."
  [#^ByteBuffer audioSamples numSamples #^AudioFormat audioFormat]
  ;; numSamples is the number of bytes to read from the buffer.
  (let [num-floats (/ numSamples (.getFrameSize audioFormat))
        bytes  (byte-array numSamples)
        floats (float-array num-floats)]
    (.get audioSamples bytes 0 numSamples)
    (FloatSampleTools/byte2floatInterleaved
     bytes 0 floats 0 num-floats audioFormat)
    (vec floats)))

5.2 Physical Ears

Together, these three functions define how ears found in a specially prepared blender file will be translated to Listener objects in a simulation. ears extracts all the children of the top-level node named "ears". add-ear! uses bind-sense to bind a Listener object located at the initial position of an "ear" node to the closest physical object in the creature. That Listener will keep the same orientation relative to the object to which it is bound, just like the camera in the sense binding demonstration. Because OpenAL simulates the Doppler effect for moving listeners, update-listener-velocity! ensures that this velocity information is always up to date.
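The velocity update amounts to a finite difference: the displacement since the previous frame divided by the frame time tpf. A minimal sketch of that arithmetic, using plain float arrays instead of jMonkeyEngine's Vector3f (the class and method names here are hypothetical):

```java
// Hypothetical helper, for illustration only.
class VelocitySketch {
    // Estimate velocity as (newPos - oldPos) / tpf, component by component.
    // Positions are {x, y, z}; tpf is the time per frame in seconds.
    static float[] velocity(float[] oldPos, float[] newPos, float tpf) {
        float inv = 1f / tpf;
        return new float[] {
            (newPos[0] - oldPos[0]) * inv,
            (newPos[1] - oldPos[1]) * inv,
            (newPos[2] - oldPos[2]) * inv
        };
    }
}
```

For example, moving from (0, 0, 0) to (0.5, 0, -1) over half a second gives a velocity of (1, 0, -2) units per second.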

(def
  ^{:doc "Return the children of the creature's \"ears\" node."
    :arglists '([creature])}
  ears
  (sense-nodes "ears"))

(defn update-listener-velocity!
  "Update the listener's velocity every update loop."
  [#^Spatial obj #^Listener lis]
  (let [old-position (atom (.getLocation lis))]
    (.addControl
     obj
     (proxy [AbstractControl] []
       (controlUpdate [tpf]
         (let [new-position (.getLocation lis)]
           (.setVelocity
            lis
            (.mult (.subtract new-position @old-position)
                   (float (/ tpf))))
           (reset! old-position new-position)))
       (controlRender [_ _])))))

(defn add-ear!
  "Create a Listener centered on the current position of 'ear
   which follows the closest physical node in 'creature and
   sends sound data to 'continuation."
  [#^Application world #^Node creature #^Spatial ear continuation]
  (let [target (closest-node creature ear)
        lis (Listener.)
        audio-renderer (.getAudioRenderer world)
        sp (hearing-pipeline continuation)]
    (.setLocation lis (.getWorldTranslation ear))
    (.setRotation lis (.getWorldRotation ear))
    (bind-sense target lis)
    (update-listener-velocity! target lis)
    (.addListener audio-renderer lis)
    (.registerSoundProcessor audio-renderer lis sp)))

5.3 Ear Creation

(defn hearing-kernel
  "Returns a function which returns auditory sensory data when called
   inside a running simulation."
  [#^Node creature #^Spatial ear]
  (let [hearing-data (atom [])
        register-listener!
        (runonce
         (fn [#^Application world]
           (add-ear!
            world creature ear
            (comp #(reset! hearing-data %)
                  byteBuffer->pulse-vector))))]
    (fn [#^Application world]
      (register-listener! world)
      (let [data @hearing-data
            topology
            (vec (map #(vector % 0) (range 0 (count data))))]
        [topology data]))))

(defn hearing!
  "Endow the creature in a particular world with the sense of
   hearing. Will return a sequence of functions, one for each ear,
   which when called will return the auditory data from that ear."
  [#^Node creature]
  (for [ear (ears creature)]
    (hearing-kernel creature ear)))

Each function returned by hearing-kernel will register a new Listener with the simulation the first time it is called. Each time it is called, the hearing function returns a [topology data] pair whose data element is a vector of the linear PCM encoded sound data heard since the last frame. The size of this vector is determined by the framerate of the game: with a constant framerate of 60 frames per second and a sampling frequency of 44,100 samples per second, the vector will have exactly 44,100 / 60 = 735 elements.
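The arithmetic behind that number, as a small sketch. The class name is hypothetical, and the 2-byte frame size in the usage note assumes 16-bit mono output, which this post does not state.

```java
// Hypothetical helper, for illustration only.
class FrameSamples {
    // Samples rendered during one frame at a constant framerate.
    static int samplesPerFrame(int sampleRate, int framesPerSecond) {
        return sampleRate / framesPerSecond;
    }

    // Bytes needed to hold one frame's worth of samples, given the
    // size in bytes of one sample frame (AudioFormat.getFrameSize()).
    static int bytesPerFrame(int sampleRate, int framesPerSecond,
                             int frameSizeBytes) {
        return samplesPerFrame(sampleRate, framesPerSecond) * frameSizeBytes;
    }
}
```

At 44,100 Hz and 60 frames per second this gives 735 samples per frame; with an assumed frame size of 2 bytes that is 1,470 bytes per frame. Halving the framerate to 30 doubles both figures.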

5.4 Visualizing Hearing

This is a simple visualization function which displays the waveform reported by the simulated sense of hearing. It converts each value in the vector returned by the hearing function from the range [-1.0, 1.0] to the range [0, 255], truncates it to an integer, and displays that number as a greyscale pixel.
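The sample-to-pixel mapping can be sketched in isolation as follows. The class and method names are hypothetical, and toRgb is only an assumption about what a greyscale packing helper typically does (the same level in the red, green, and blue channels).

```java
// Hypothetical helper, for illustration only.
class GraySketch {
    // Map a PCM sample in [-1.0, 1.0] to a grey level in [0, 255]:
    // shift to [0, 2], halve to [0, 1], scale by 255, then truncate.
    static int toGrayLevel(float sample) {
        return ((int) (255 * ((1 + sample) / 2))) % 256;
    }

    // Pack a grey level into a 24-bit RGB integer (assumed layout).
    static int toRgb(int level) {
        return (level << 16) | (level << 8) | level;
    }
}
```

Silence (a sample of 0.0) therefore shows up as mid-grey, level 127, while full rarefaction is black and full compression is white.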

(in-ns 'cortex.hearing)

(defn view-hearing
  "Creates a function which accepts a list of auditory data and
   display each element of the list to the screen as an image."
  []
  (view-sense
   (fn [[coords sensor-data]]
     (let [pixel-data
           (vec
            (map
             #(rem (int (* 255 (/ (+ 1 %) 2))) 256)
             sensor-data))
           height 50
           image (BufferedImage. (max 1 (count coords)) height
                                 BufferedImage/TYPE_INT_RGB)]
       (dorun
        (for [x (range (count coords))]
          (dorun
           (for [y (range height)]
             (let [raw-sensor (pixel-data x)]
               (.setRGB image x y (gray raw-sensor)))))))
       image))))

6 Testing Hearing

6.1 Advanced Java Example

I wrote a test case in Java that demonstrates the use of the Java components of this hearing system. It is part of a larger Java library for capturing perfect audio from jMonkeyEngine. Some of the clojure constructs above are partially reiterated in the Java source file. But first, the video! As far as I know, this is the first instance of multiple simulated listeners in a virtual environment using OpenAL.

YouTube

The blue sphere is emitting a constant sound. Each gray box is listening for sound, and will change color from gray to green if it detects sound which is louder than a certain threshold. As the blue sphere travels along the path, it excites each of the cubes in turn.

package com.aurellem.capture.examples;

import java.io.File;
import java.io.IOException;
import java.lang.reflect.Field;
import java.nio.ByteBuffer;

import javax.sound.sampled.AudioFormat;

import org.tritonus.share.sampled.FloatSampleTools;

import com.aurellem.capture.AurellemSystemDelegate;
import com.aurellem.capture.Capture;
import com.aurellem.capture.IsoTimer;
import com.aurellem.capture.audio.CompositeSoundProcessor;
import com.aurellem.capture.audio.MultiListener;
import com.aurellem.capture.audio.SoundProcessor;
import com.aurellem.capture.audio.WaveFileWriter;
import com.jme3.app.SimpleApplication;
import com.jme3.audio.AudioNode;
import com.jme3.audio.Listener;
import com.jme3.cinematic.MotionPath;
import com.jme3.cinematic.events.AbstractCinematicEvent;
import com.jme3.cinematic.events.MotionTrack;
import com.jme3.material.Material;
import com.jme3.math.ColorRGBA;
import com.jme3.math.FastMath;
import com.jme3.math.Quaternion;
import com.jme3.math.Vector3f;
import com.jme3.scene.Geometry;
import com.jme3.scene.Node;
import com.jme3.scene.shape.Box;
import com.jme3.scene.shape.Sphere;
import com.jme3.system.AppSettings;
import com.jme3.system.JmeSystem;

/**
 *
 * Demonstrates advanced use of the audio capture and recording
 * features. Multiple perspectives of the same scene are
 * simultaneously rendered to different sound files.
 *
 * A key limitation of the way multiple listeners are implemented is
 * that only 3D positioning effects are realized for listeners other
 * than the main LWJGL listener. This means that audio effects such
 * as environment settings will *not* be heard on any auxiliary
 * listeners, though sound attenuation will work correctly.
 *
 * Multiple listeners as realized here might be used to make AI
 * entities that can each hear the world from their own perspective.
 *
 * @author Robert McIntyre
 */
public class Advanced extends SimpleApplication {

    /**
     * You will see three grey cubes, a blue sphere, and a path which
     * circles each cube. The blue sphere is generating a constant
     * monotone sound as it moves along the track. Each cube is
     * listening for sound; when a cube hears sound whose intensity is
     * greater than a certain threshold, it changes its color from
     * grey to green.
     *
     * Each cube is also saving whatever it hears to a file. The
     * scene from the perspective of the viewer is also saved to a
     * video file. When you listen to each of the sound files
     * alongside the video, the sound will get louder when the sphere
     * approaches the cube that generated that sound file. This
     * shows that each listener is hearing the world from its own
     * perspective.
     *
     */
    public static void main(String[] args) {
        Advanced app = new Advanced();
        AppSettings settings = new AppSettings(true);
        settings.setAudioRenderer(AurellemSystemDelegate.SEND);
        JmeSystem.setSystemDelegate(new AurellemSystemDelegate());
        app.setSettings(settings);
        app.setShowSettings(false);
        app.setPauseOnLostFocus(false);

        try {
            //Capture.captureVideo(app, File.createTempFile("advanced",".avi"));
            Capture.captureAudio(app, File.createTempFile("advanced",".wav"));
        }
        catch (IOException e) {e.printStackTrace();}

        app.start();
    }

    private Geometry bell;
    private Geometry ear1;
    private Geometry ear2;
    private Geometry ear3;
    private AudioNode music;
    private MotionTrack motionControl;
    private IsoTimer motionTimer = new IsoTimer(60);

    private Geometry makeEar(Node root, Vector3f position){
        Material mat = new Material(assetManager,
                                    "Common/MatDefs/Misc/Unshaded.j3md");
        Geometry ear = new Geometry("ear", new Box(1.0f, 1.0f, 1.0f));
        ear.setLocalTranslation(position);
        mat.setColor("Color", ColorRGBA.Green);
        ear.setMaterial(mat);
        root.attachChild(ear);
        return ear;
    }

    private Vector3f[] path = new Vector3f[]{
        // loop 1
        new Vector3f(0, 0, 0),
        new Vector3f(0, 0, -10),
        new Vector3f(-2, 0, -14),
        new Vector3f(-6, 0, -20),
        new Vector3f(0, 0, -26),
        new Vector3f(6, 0, -20),
        new Vector3f(0, 0, -14),
        new Vector3f(-6, 0, -20),
        new Vector3f(0, 0, -26),
        new Vector3f(6, 0, -20),
        // loop 2
        new Vector3f(5, 0, -5),
        new Vector3f(7, 0, 1.5f),
        new Vector3f(14, 0, 2),
        new Vector3f(20, 0, 6),
        new Vector3f(26, 0, 0),
        new Vector3f(20, 0, -6),
        new Vector3f(14, 0, 0),
        new Vector3f(20, 0, 6),
        new Vector3f(26, 0, 0),
        new Vector3f(20, 0, -6),
        new Vector3f(14, 0, 0),
        // loop 3
        new Vector3f(8, 0, 7.5f),
        new Vector3f(7, 0, 10.5f),
        new Vector3f(6, 0, 20),
        new Vector3f(0, 0, 26),
        new Vector3f(-6, 0, 20),
        new Vector3f(0, 0, 14),
        new Vector3f(6, 0, 20),
        new Vector3f(0, 0, 26),
        new Vector3f(-6, 0, 20),
        new Vector3f(0, 0, 14),
        // begin ellipse
        new Vector3f(16, 5, 20),
        new Vector3f(0, 0, 26),
        new Vector3f(-16, -10, 20),
        new Vector3f(0, 0, 14),
        new Vector3f(16, 20, 20),
        new Vector3f(0, 0, 26),
        new Vector3f(-10, -25, 10),
        new Vector3f(-10, 0, 0),
        // come at me!
        new Vector3f(-28.00242f, 48.005623f, -34.648228f),
        new Vector3f(0, 0 , -20),
    };

    private void createScene() {
        Material mat = new Material(assetManager,
                                    "Common/MatDefs/Misc/Unshaded.j3md");
        bell = new Geometry( "sound-emitter" , new Sphere(15,15,1));
        mat.setColor("Color", ColorRGBA.Blue);
        bell.setMaterial(mat);
        rootNode.attachChild(bell);

        ear1 = makeEar(rootNode, new Vector3f(0, 0 ,-20));
        ear2 = makeEar(rootNode, new Vector3f(0, 0 ,20));
        ear3 = makeEar(rootNode, new Vector3f(20, 0 ,0));

        MotionPath track = new MotionPath();

        for (Vector3f v : path){
            track.addWayPoint(v);
        }
        track.setCurveTension(0.80f);

        motionControl = new MotionTrack(bell,track);
        // for now, use reflection to change the timer...
        // motionControl.setTimer(new IsoTimer(60));

        try {
            Field timerField;
            timerField =
                AbstractCinematicEvent.class.getDeclaredField("timer");
            timerField.setAccessible(true);
            try {timerField.set(motionControl, motionTimer);}
            catch (IllegalArgumentException e) {e.printStackTrace();}
            catch (IllegalAccessException e) {e.printStackTrace();}
        }
        catch (SecurityException e) {e.printStackTrace();}
        catch (NoSuchFieldException e) {e.printStackTrace();}

        motionControl.setDirectionType
            (MotionTrack.Direction.PathAndRotation);
        motionControl.setRotation
            (new Quaternion().fromAngleNormalAxis
             (-FastMath.HALF_PI, Vector3f.UNIT_Y));
        motionControl.setInitialDuration(20f);
        motionControl.setSpeed(1f);

        track.enableDebugShape(assetManager, rootNode);
        positionCamera();
    }

    private void positionCamera(){
        this.cam.setLocation
            (new Vector3f(-28.00242f, 48.005623f, -34.648228f));
        this.cam.setRotation
            (new Quaternion
             (0.3359635f, 0.34280345f, -0.13281013f, 0.8671653f));
    }

    private void initAudio() {
        org.lwjgl.input.Mouse.setGrabbed(false);
        music = new AudioNode(assetManager,
                              "Sound/Effects/Beep.ogg", false);
        rootNode.attachChild(music);
        audioRenderer.playSource(music);
        music.setPositional(true);
        music.setVolume(1f);
        music.setReverbEnabled(false);
        music.setDirectional(false);
        music.setMaxDistance(200.0f);
        music.setRefDistance(1f);
        //music.setRolloffFactor(1f);
        music.setLooping(false);
        audioRenderer.pauseSource(music);
    }

    public class Dancer implements SoundProcessor {
        Geometry entity;
        float scale = 2;
        public Dancer(Geometry entity){
            this.entity = entity;
        }

        /**
         * this method is irrelevant since there is no state to cleanup.
         */
        public void cleanup() {}

        /**
         * Respond to sound! This is the brain of an AI entity that
         * hears its surroundings and reacts to them.
         */
        public void process(ByteBuffer audioSamples,
                            int numSamples, AudioFormat format) {
            audioSamples.clear();
            byte[] data = new byte[numSamples];
            float[] out = new float[numSamples];
            audioSamples.get(data);
            FloatSampleTools.
                byte2floatInterleaved
                (data, 0, out, 0, numSamples/format.getFrameSize(), format);

            // Find the largest sample value heard this frame.
            float max = Float.NEGATIVE_INFINITY;
            for (float f : out){if (f > max) max = f;}
            audioSamples.clear();

            if (max > 0.1){
                entity.getMaterial().setColor("Color", ColorRGBA.Green);
            }
            else {
                entity.getMaterial().setColor("Color", ColorRGBA.Gray);
            }
        }
    }

    private void prepareEar(Geometry ear, int n){
        if (this.audioRenderer instanceof MultiListener){
            MultiListener rf = (MultiListener)this.audioRenderer;

            Listener auxListener = new Listener();
            auxListener.setLocation(ear.getLocalTranslation());

            rf.addListener(auxListener);
            WaveFileWriter aux = null;

            try {
                aux = new WaveFileWriter
                    (File.createTempFile("advanced-audio-" + n, ".wav"));}
            catch (IOException e) {e.printStackTrace();}

            rf.registerSoundProcessor
                (auxListener,
                 new CompositeSoundProcessor(new Dancer(ear), aux));
        }
    }

    public void simpleInitApp() {
        this.setTimer(new IsoTimer(60));
        initAudio();

        createScene();

        // Number the ears so each sound file name identifies its cube.
        prepareEar(ear1, 1);
        prepareEar(ear2, 2);
        prepareEar(ear3, 3);

        motionControl.play();
    }

    public void simpleUpdate(float tpf) {
        motionTimer.update();
        if (music.getStatus() != AudioNode.Status.Playing){
            music.play();
        }
        Vector3f loc = cam.getLocation();
        Quaternion rot = cam.getRotation();
        listener.setLocation(loc);
        listener.setRotation(rot);
        music.setLocalTranslation(bell.getLocalTranslation());
    }
}

Here is a small clojure program to drive the java program and make it available as part of my test suite.

(in-ns 'cortex.test.hearing)

(defn test-java-hearing
  "Testing hearing:
   You should see a blue sphere flying around several
   cubes. As the sphere approaches each cube, it turns
   green."
  []
  (doto (com.aurellem.capture.examples.Advanced.)
    (.setSettings
     (doto (AppSettings. true)
       (.setAudioRenderer "Send")))
    (.setShowSettings false)
    (.setPauseOnLostFocus false)))

6.2 Adding Hearing to the Worm

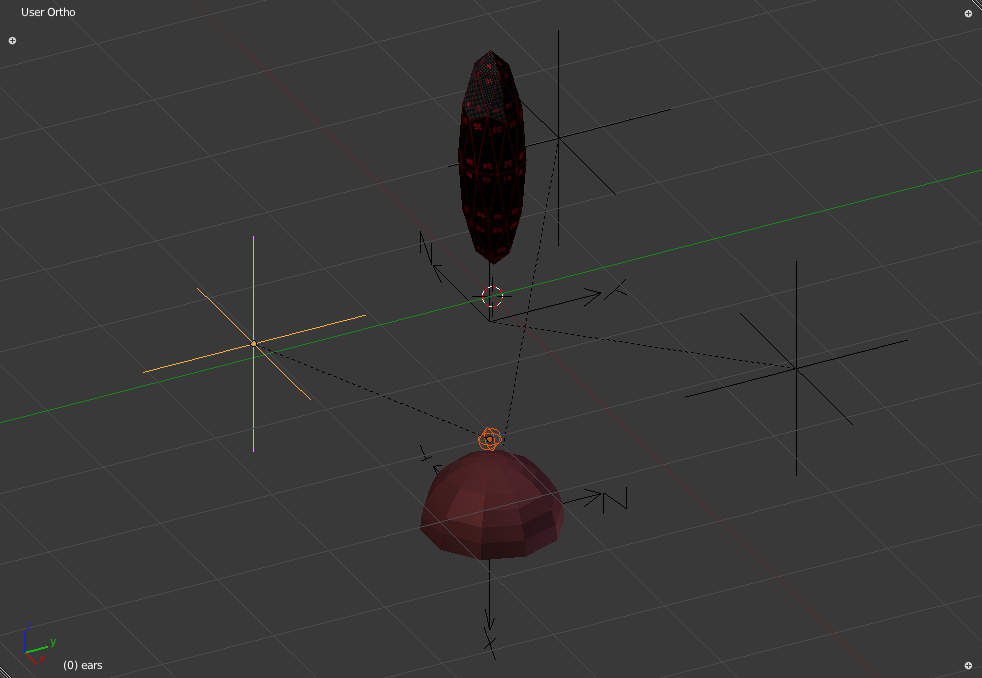

To the worm, I add a new node called "ears" with one child which represents the worm's single ear.

Figure 1: The worm with newly added nodes describing an ear.

The node highlighted in yellow is the top-level "ears" node. Its child, highlighted in orange, represents the single ear the creature has. The ear will be localized right above the curved part of the worm's lower hemispherical region opposite the eye.

The other empty nodes represent the worm's single joint and eye and are described in body and vision.

(in-ns 'cortex.test.hearing)

(defn test-worm-hearing
  "Testing hearing:
   You will see the worm fall onto a table. There is a long
   horizontal bar which shows the waveform of whatever the worm is
   hearing. When you play a sound, the bar should display a waveform.

   Keys:
     <enter> : play sound
     l       : play hymn"
  ([] (test-worm-hearing false))
  ([record?]
     (let [the-worm (doto (worm) (body!))
           hearing (hearing! the-worm)
           hearing-display (view-hearing)

           tone (AudioNode. (asset-manager)
                            "Sounds/pure.wav" false)

           hymn (AudioNode. (asset-manager)
                            "Sounds/ear-and-eye.wav" false)]
       (world
        (nodify [the-worm (floor)])
        (merge standard-debug-controls
               {"key-return"
                (fn [_ value]
                  (if value (.play tone)))
                "key-l"
                (fn [_ value]
                  (if value (.play hymn)))})
        (fn [world]
          (light-up-everything world)
          (let [timer (IsoTimer. 60)]
            (.setTimer world timer)
            (display-dilated-time world timer))
          (if record?
            (do
              (com.aurellem.capture.Capture/captureVideo
               world
               (File. "/home/r/proj/cortex/render/worm-audio/frames"))
              (com.aurellem.capture.Capture/captureAudio
               world
               (File. "/home/r/proj/cortex/render/worm-audio/audio.wav")))))

        (fn [world tpf]
          (hearing-display
           (map #(% world) hearing)
           (if record?
             (File. "/home/r/proj/cortex/render/worm-audio/hearing-data"))))))))

In this test, I load the worm with its newly formed ear and let it hear sounds. The sound the worm is hearing is localized to the origin of the world, and you can see that as the worm moves farther away from the origin when it is hit by balls, it hears the sound less intensely.

The sound you hear in the video is from the worm's perspective. Notice how the pure tone becomes fainter and the visual display of the auditory data becomes less pronounced as the worm falls farther away from the source of the sound.

YouTube

The worm can now hear the sound pulses produced from the hymn. Notice the strikingly different pattern that human speech makes compared to the instruments. Once the worm is pushed off the floor, the sound it hears is attenuated, and the display of the sound it hears becomes fainter. This shows the 3D localization of sound in this world.

6.2.1 Creating the Ear Video

(ns cortex.video.magick3
  (:import java.io.File)
  (:use clojure.java.shell))

(defn images [path]
  (sort (rest (file-seq (File. path)))))

(def base "/home/r/proj/cortex/render/worm-audio/")

(defn pics [file]
  (images (str base file)))

(defn combine-images []
  (let [main-view (pics "frames")
        hearing (pics "hearing-data")
        background (repeat 9001 (File. (str base "background.png")))
        targets (map
                 #(File. (str base "out/" (format "%07d.png" %)))
                 (range 0 (count main-view)))]
    (dorun
     (pmap
      (comp
       (fn [[background main-view hearing target]]
         (println target)
         (sh "convert"
             background
             main-view "-geometry" "+66+21" "-composite"
             hearing "-geometry" "+21+526" "-composite"
             target))
       (fn [& args] (map #(.getCanonicalPath %) args)))
      background main-view hearing targets))))

cd /home/r/proj/cortex/render/worm-audio
ffmpeg -r 60 -i out/%07d.png -i audio.wav \
       -b:a 128k -b:v 9001k \
       -c:a libvorbis -c:v libtheora -g 60 worm-hearing.ogg

7 Headers

(ns cortex.hearing
  "Simulate the sense of hearing in jMonkeyEngine3. Enables multiple
   listeners at different positions in the same world. Automatically
   reads ear-nodes from specially prepared blender files and
   instantiates them in the world as simulated ears."
  {:author "Robert McIntyre"}
  (:use (cortex world util sense))
  (:import java.nio.ByteBuffer)
  (:import java.awt.image.BufferedImage)
  (:import org.tritonus.share.sampled.FloatSampleTools)
  (:import (com.aurellem.capture.audio
            SoundProcessor AudioSendRenderer))
  (:import javax.sound.sampled.AudioFormat)
  (:import (com.jme3.scene Spatial Node))
  (:import com.jme3.audio.Listener)
  (:import com.jme3.app.Application)
  (:import com.jme3.scene.control.AbstractControl))

(ns cortex.test.hearing
  (:use (cortex world util hearing body))
  (:use cortex.test.body)
  (:import (com.jme3.audio AudioNode Listener))
  (:import java.io.File)
  (:import com.jme3.scene.Node
           com.jme3.system.AppSettings
           com.jme3.math.Vector3f)
  (:import (com.aurellem.capture Capture IsoTimer RatchetTimer)))

8 Source Listing

9 Next

The worm can see and hear, but it can't feel the world or itself. Next post, I'll give the worm a sense of touch.